Kartik Audhkhasi is a PhD graduate of the Ming Hsieh Department of Electrical Engineering who works on neural networks at IBM.

On April 10th, the EE department is hosting our first Emerging Trends seminar. Meant to bring together the diverse areas of EE around a single topic and encourage collaboration, the first seminar features Professor Jay Kuo, who will speak about neural networks and deep learning. To highlight neural networks, we’ll be running three blogs this week by different department community members.

In this second blog, EE alum and former Ming Hsieh Institute Scholar Kartik Audhkhasi shares his experience working at IBM and explains why electrical engineers are well positioned to work with this new technology.

By Kartik Audhkhasi

“The machines are coming!”

These days you are more likely to hear this from the press than from the hero of a Hollywood sci-fi movie. The interest around neural networks, artificial intelligence, and “deep learning” is at an all-time high. What distinguishes this exuberance from previous bouts of hysteria? The answer is that today we actually have a large number of real-world applications driven by neural networks.

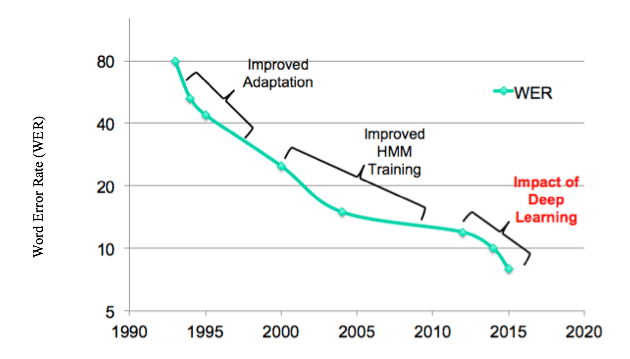

Take the field I work in at IBM: Automatic Speech Recognition (ASR). Figure 1 shows the progress of the error rate of automatically recognizing English conversational speech on a public benchmark test set over the past few decades, as the technology has evolved. The use of neural networks has led to historic improvements in performance over a short time span. IBM’s state-of-the-art ASR system heavily uses neural networks and is now nearly as good as humans on this task. Other fields such as computer vision have seen similar unprecedented jumps in performance upon using neural networks. While in the past, the potential of neural networks was largely restricted to research settings, today we are living in a world being changed by them.

Figure 1: This figure shows the progress of ASR word error rate (WER) over the last two decades on a public benchmark test set for English conversational speech. Improved adaptation and training of hidden Markov models (HMMs) resulted in significant progress till around 2005. The performance reached a plateau between 2005 and 2012. The introduction of deep learning around 2012 led to a sharp improvement in performance.

Most artificial intelligence (AI) problems can be written mathematically as the task of learning a transformation “f” that best maps input data “x” to a desired output “y”. For example, in my field of ASR research, x is the input speech signal and y is the sequence of words. The ASR system is thus a function f that maps input speech to a sequence of words. The ultimate goal of AI is to learn, for each real-world task, a function f that performs as close as possible to humans on that task.

The success of this endeavor is contingent on the choice of the set of functions to which f belongs. Neural networks are an extremely powerful set of functions that can approximate almost any real-world function of interest. This “universal approximation” capability makes neural networks very attractive for many applications. A neural network contains a sequence of simple mathematical operations such as addition, multiplication, and non-linear functions that transform the input data x into the desired output y. Training such a neural network requires a large data set of (x, y) pairs. The neural network takes each input x and makes its best guess of the output, y*. The aim of learning is to update the parameters of the neural network such that the error y - y* is as small as possible. The famous “back-propagation algorithm” computes these parameter updates using nothing more than basic calculus.
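To make the training loop described above concrete, here is a minimal sketch in plain Python. It is only an illustration, not how a production ASR system is built: the toy target y = x², the network size, the learning rate, and the function name `train_tiny_network` are all assumptions chosen for the example. The network has one hidden layer of tanh units, and the parameter updates are derived by hand with the chain rule, which is all back-propagation amounts to at this scale.

```python
import math
import random


def train_tiny_network(steps=2000, lr=0.05, hidden=8, seed=0):
    """Fit the toy target y = x^2 with a one-hidden-layer tanh network.

    Returns (loss before training, loss after training).
    """
    rng = random.Random(seed)
    # Toy (x, y) training pairs; the target function is an assumption.
    data = [(i / 4.0, (i / 4.0) ** 2) for i in range(-4, 5)]

    # Parameters: hidden-layer weights and biases, output weights and bias.
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Multiplications, additions, and a non-linear function (tanh).
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        y_star = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, y_star

    def mean_squared_error():
        return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

    initial_loss = mean_squared_error()
    for _ in range(steps):
        for x, y in data:
            h, y_star = forward(x)  # the network's best guess y*
            err = y_star - y        # derivative of 0.5 * (y* - y)^2 w.r.t. y*
            for j in range(hidden):
                # Chain rule through the output weight and the tanh unit.
                grad_w2 = err * h[j]
                grad_pre = err * w2[j] * (1.0 - h[j] ** 2)
                w2[j] -= lr * grad_w2
                w1[j] -= lr * grad_pre * x
                b1[j] -= lr * grad_pre
            b2 -= lr * err
    return initial_loss, mean_squared_error()


if __name__ == "__main__":
    before, after = train_tiny_network()
    print(f"mean squared error before training: {before:.4f}")
    print(f"mean squared error after training:  {after:.4f}")
```

Running the script prints the error before and after training, so you can see the guesses y* move toward the targets y. Toolkits such as Theano, Torch, and TensorFlow compute these gradients automatically, which is why hand-written updates like the ones above are rarely needed in practice.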

It is interesting to note that the back-propagation algorithm was developed around four decades ago and has not changed significantly since. What has changed is the amount of data and computing resources we have. This is the first factor that has contributed to the neural network revolution.

The second, and equally important, factor is access. Until recently, only universities and large corporations had access to the computing resources and data needed to do work in this field. Recently, several high-level programming toolkits (such as Theano, Torch, and TensorFlow) that support computing on Graphics Processing Units (GPUs) have leveled the playing field. This has reduced the time lags between conceiving a research idea, implementing it, showing proof-of-concept, building a real-world product, and deploying that product for mass consumption. As the process has become a bit more democratized, more people can try out all kinds of new ideas, leading to more advances.

This is an especially exciting time to be an electrical engineer. More than anyone else, we sit at the intersection of science, engineering, applications, and data, which provides us with the right mix of skills to succeed in this new age of neural networks.

I believe we will see a tighter integration between neural networks and human intelligence in the future. While neural networks are better at rapidly processing large amounts of data, there are several important problems at which humans are significantly better. These include reasoning, logic, and detecting the behavior and emotions of other humans. I believe that future neural systems will use more human expertise in the loop, not just during the design and training phase, but also while operating in the field. Neural networks and AI shouldn’t be seen as technologies that will replace humanity. Instead, they can lead to an era of greatly improved human understanding and communication.

Kartik Audhkhasi is a Research Staff Member at IBM Research in Yorktown Heights, NY and an alum of the Ming Hsieh Department of Electrical Engineering, where he received his PhD in summer 2014. He currently works on neural networks, automatic speech recognition, and natural language processing as part of the Speech Algorithms and Models group at IBM Research.

Published on April 5th, 2017

Last updated on April 7th, 2021