On April 10th, the EE department is hosting our first Emerging Trends seminar. Meant to bring together the diverse areas of EE around a single topic and encourage collaboration, the first seminar features Professor Jay Kuo, who will speak about neural networks and deep learning. To highlight neural networks, we’ll be running three blogs this week by different department community members.

In this third blog, EE undergraduate senior and Stamps Leadership Scholar Eric Deng shares how machine learning turns data into wisdom and what that means for predicting what people will do next.

By Eric Deng

Machine learning is one of the hottest tech fields today, and deservedly so – the applications of machine learning, when implemented properly and effectively, are boundless. The technology is already used heavily in areas like computer vision and pattern recognition, and new advancements are bringing it closer than ever to predicting the behavior of the most unpredictable of subjects – humans.

First, a quick overview of how machine learning takes in, interprets, and applies data:

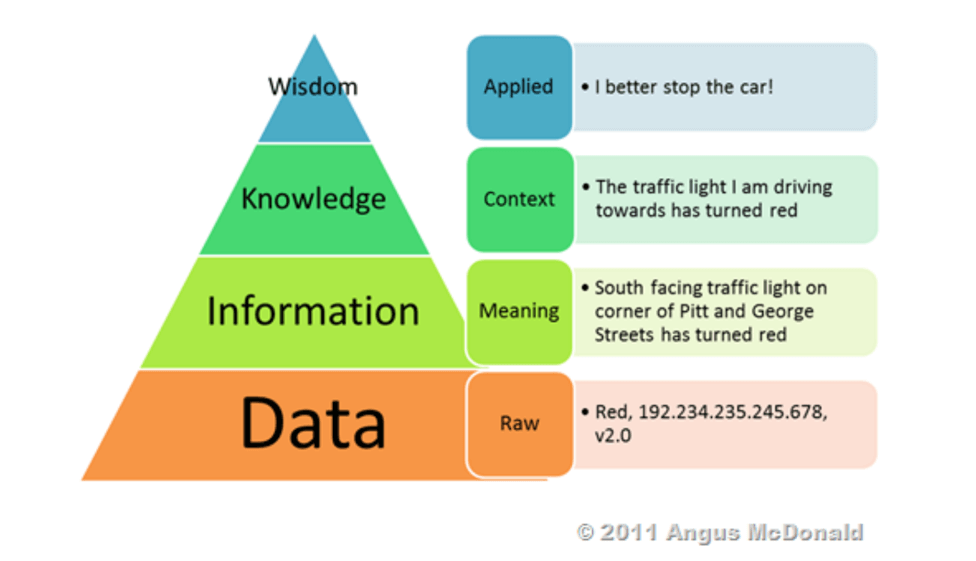

Data used as input for machine learning problems is often classified into two categories: labeled and unlabeled. The main difference between the two is how much the input data has been pre-interpreted. When it comes to representing knowledge in computational systems, content can be broken down into four levels of complexity: (1) Data, (2) Information, (3) Knowledge, and (4) Wisdom. What separates these levels is the amount of interpretation that has been applied to the content. In this “pyramid” structure, unlabeled datasets fall into the “Data” level, while labeled datasets can fall into any of the other three, although they almost always land in the “Information” level. This is all fine, except that what your application really wants is knowledge and/or wisdom.

One of the most difficult parts of machine learning problems is feature selection, the process of choosing the set of features in a dataset that most effectively represents the characteristics you are interested in. Even in cases where you have access to information, translating that information to knowledge and wisdom is not an easy task. Which bits of information are important? How important are they relative to other information? Does a piece of information’s value change in different contexts? There are a number of manual feature selection approaches that people traditionally use, but as data gets more complex and more massive, these approaches become less and less feasible to implement.
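To make the feature selection idea concrete, here is a minimal sketch of one simple, traditional approach: score each feature by how strongly it correlates with the label, then rank the features. The dataset, the feature names (`heart_rate`, `gaze_shifts`, `room_temp`), and the "engaged" labels are all made up for illustration, and correlation ranking is only one of many possible selection strategies.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no signal
    return cov / math.sqrt(var_x * var_y)

def rank_features(rows, labels, names):
    """Return feature names sorted by |correlation with the label|, strongest first."""
    scores = {}
    for j, name in enumerate(names):
        column = [row[j] for row in rows]
        scores[name] = abs(pearson(column, labels))
    return sorted(names, key=lambda name: scores[name], reverse=True)

# Hypothetical toy data: each row is (heart_rate, gaze_shifts, room_temp).
names = ["heart_rate", "gaze_shifts", "room_temp"]
rows = [
    (62, 3, 21),
    (75, 4, 22),
    (90, 9, 21),
    (98, 8, 22),
    (66, 2, 21),
    (94, 10, 22),
]
labels = [0, 0, 1, 1, 0, 1]  # 1 = "engaged", 0 = "not engaged" (made-up labels)

ranking = rank_features(rows, labels, names)
print(ranking)  # the weakly related room_temp feature ends up last
```

Even this tiny example hints at the scaling problem: every new application needs its own labels and its own scoring choices, and a per-feature score like this ignores how features interact with each other.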

As a researcher and engineer, I am very interested in human-centered applications of technology. This includes personalized learning, healthcare, and even entertainment. The feature selection problem is especially difficult when it comes to humans. There are tremendous amounts of data that can be collected and analyzed (e.g., bio-signals, physical movement, speech, gaze behaviors). And in each application, the information that you want to extract from the many data inputs is different, so the feature selection process needs to start from scratch.

Deep learning approaches have demonstrated fascinating results in this space. Feature learning allows systems to automatically determine features from a large data set; then, using higher-level information about the data, systems can help determine through experimentation which features are important.
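For a rough feel of what "learning a feature" from unlabeled data means, here is a small sketch. It is not deep learning: it uses principal component analysis (computed by power iteration) as a much simpler stand-in, and the sample points are invented. The idea it shares with feature learning is that the system finds a compact representation on its own, with no labels and no hand-picked features.

```python
import math

def first_principal_direction(rows, iterations=100):
    """Estimate the direction of greatest variance in `rows` by power iteration."""
    n = len(rows)
    d = len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    # Sample covariance matrix (d x d) of the centered data.
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    direction = [1.0 / math.sqrt(d)] * d  # arbitrary unit-length starting guess
    for _ in range(iterations):
        product = [sum(cov[a][b] * direction[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in product))
        direction = [x / norm for x in product]
    return means, direction

def encode(row, means, direction):
    """Project one raw sample onto the learned direction (a single learned feature)."""
    return sum((x - m) * w for x, m, w in zip(row, means, direction))

# Hypothetical unlabeled samples: two raw measurements that move together.
samples = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]

means, direction = first_principal_direction(samples)
codes = [encode(row, means, direction) for row in samples]
print(direction)  # the learned direction
print(codes)      # each two-number sample summarized by one learned number
```

A deep network does something far richer, stacking many nonlinear layers of learned features, but the workflow is similar: feed in raw data, let the system discover the representation, then check which learned features actually help with the task.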

As I mentioned above, deep learning has shown lots of promise in predicting human behavior. Humans are inherently probabilistic beings in that, even given all contextual knowledge, it is theoretically still impossible to predict what we’ll do next. As an undergrad in college, I’ve witnessed this phenomenon firsthand.

But by “watching” human behavior, deep learning systems have been able to predict our next move in constrained situations. Two examples of this are the Predictive Vision system from MIT, which learned by watching television shows to predict whether two people will hug, kiss, or high-five, and the Brains4Cars project, which predicts driver behavior based on cameras and wearables.

Deep learning has already proven highly effective in many fields. As it is adapted for use in many others, I’m very excited to see what the technology can do to help us build more adaptive and effective systems for human users.

Eric Deng is a junior in the Ming Hsieh Department of Electrical Engineering and a Progressive Degree Program student in Product Development in Aerospace and Mechanical Engineering. Eric spent his last two summers as an intern at Facebook and is currently a research assistant in Prof. Maja Mataric’s Interaction Lab.

Published on April 7th, 2017

Last updated on January 22nd, 2018