Speech Processing & Conversational AI

A Peek Into the Startup Scene

Wednesday, March 7th, 2pm @EEB 132

Three distinguished alumni of the Ming Hsieh Department of Electrical Engineering return home to share their experiences in the startup world. Each presentation will give attendees insights into what they do and how they got there. Presentations will be followed by a discussion addressing the challenges that startups face.

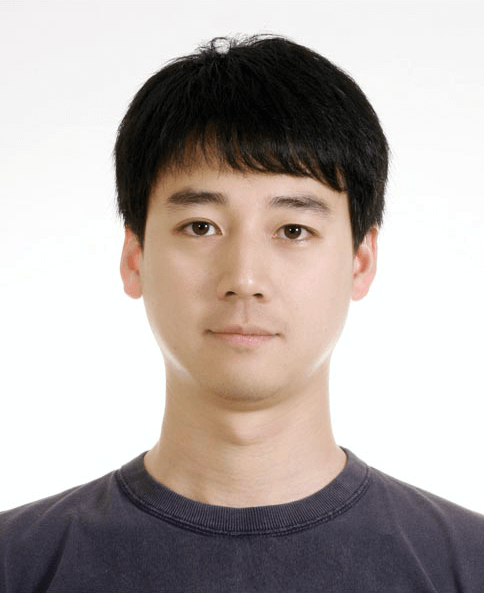

Dr. Samuel Kim - Senior Speech Specialist at Gridspace

The Gridspace Sift conversational search system is designed to search through billions of minutes of long-form conversational speech data. Its core technology supports complex searches that combine semantic and signal information, together with a method for enforcing constraints on time, logical structure, and metadata. The system uses specialized word and signal embeddings, along with methods that transform compound queries (boolean, set, and temporal operators) into matrix operations performed in a highly distributed manner.
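
The abstract does not say how Sift maps queries to matrices. The following is only a minimal sketch of the general idea, under loudly stated assumptions. Each query term or signal event is assumed to have already been detected as a boolean matrix of shape (calls × time frames). A temporal "near" operator then becomes a matrix product with a banded 0/1 matrix, and boolean operators become elementwise operations. The names `near`, `within`, `calls_matching`, `refund`, and `raised_voice` are hypothetical illustrations, not Gridspace APIs.

```python
import numpy as np

# Hypothetical setup: rows are calls, columns are fixed-length time frames.
# True means a term (semantic) or event (signal) was detected in that frame.

def near(m: np.ndarray, k: int) -> np.ndarray:
    """Mark every frame within k frames of a match, via a banded matrix product."""
    n = m.shape[1]
    idx = np.arange(n)
    # band[i, j] = 1 when frames i and j are at most k frames apart
    band = (np.abs(idx[:, None] - idx[None, :]) <= k).astype(np.int64)
    return (m.astype(np.int64) @ band) > 0

def within(a: np.ndarray, b: np.ndarray, k: int) -> np.ndarray:
    """Frames where A occurs within k frames of some occurrence of B (temporal AND)."""
    return a & near(b, k)

def calls_matching(frames: np.ndarray) -> np.ndarray:
    """Collapse frame-level matches to one boolean per call (for set operations)."""
    return frames.any(axis=1)

# Toy data: 3 calls x 8 frames.
refund = np.zeros((3, 8), dtype=bool)
raised_voice = np.zeros((3, 8), dtype=bool)
refund[0, 2] = True
raised_voice[0, 3] = True   # call 0: term and event close together
refund[1, 0] = True
raised_voice[1, 7] = True   # call 1: far apart in time
raised_voice[2, 4] = True   # call 2: event only, no term

hits = calls_matching(within(refund, raised_voice, k=2))
print(np.flatnonzero(hits))  # [0]
```

Because each row (call) is processed independently, the rows could in principle be sharded across workers. That is one plausible reading of "highly distributed," not a description of Sift's actual architecture. Set operations over calls reduce to `&`, `|`, and `~` on the per-call vectors returned by `calls_matching`.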

Dr. Kyu Jeong Han - Principal Machine Learning Scientist at Capio Inc.

Capio has recently been one of the leading research forces in industry, competing with IBM and Microsoft to bring the word error rate (WER) of conversational speech recognition systems closer to that of human transcribers. In this talk, we share the latest results of the Capio 2017 conversational speech recognition system on the industry-standard Switchboard/CallHome test sets and show how they compare with those of other state-of-the-art systems. We will also offer perspectives for comparing and contrasting human and machine transcriptions, leading to an open discussion of how close machines are to human ability in transcribing conversational speech.
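
For readers unfamiliar with the metric, the sketch below shows the standard definition of word error rate. WER is (substitutions + deletions + insertions) divided by the number of reference words, computed with a word-level edit distance. This sketch is an assumption about the textbook metric, not Capio's scoring pipeline. Official Switchboard/CallHome results are scored with NIST tools that also apply text normalization, which this sketch omits. The function name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N via word-level Levenshtein distance (no text normalization)."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dp[i][j] = minimum edits turning the first i reference words into the first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)

# One substitution (sat -> sit) and one deletion (the): 2 errors over 6 reference words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Note that WER can exceed 1.0 when the hypothesis contains many insertions. Comparisons with human transcribers therefore depend on scoring both against the same reference and normalization rules.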

Dr. Jangwon Kim - VP of Research at Canary Speech

The speech signal carries rich information about the speaker's state. At Canary Speech, we develop healthcare applications that use speech and language technologies to improve the quality of healthcare services. Specifically, we design speech data collection protocols, build mobile and web applications, and build back-end classification or regression/ranking models for the automatic assessment (or early warning) of cognitive diseases and impairment, motor control disorders, and mental state. This talk will describe the speech and language technologies used in our system, as well as various issues and challenges of R&D in the healthcare industry.